A bipartisan bill introduced in the U.S. Senate on Tuesday would allow victims of “digital forgeries” to pursue penalties against their alleged perpetrators, The Guardian reported.

The Disrupt Explicit Forged Images and Non-Consensual Edits Act of 2024, or the “Defiance Act,” would allow people depicted in sexually explicit or naked “digital forgeries” to pursue civil penalties as restitution from “individuals who produced or possessed the forgery with intent to distribute it,” as well as from people who obtained the images knowing they were made without consent, according to The Guardian. The measure was reportedly prompted by the release of pornographic AI-generated images of Taylor Swift on Twitter.

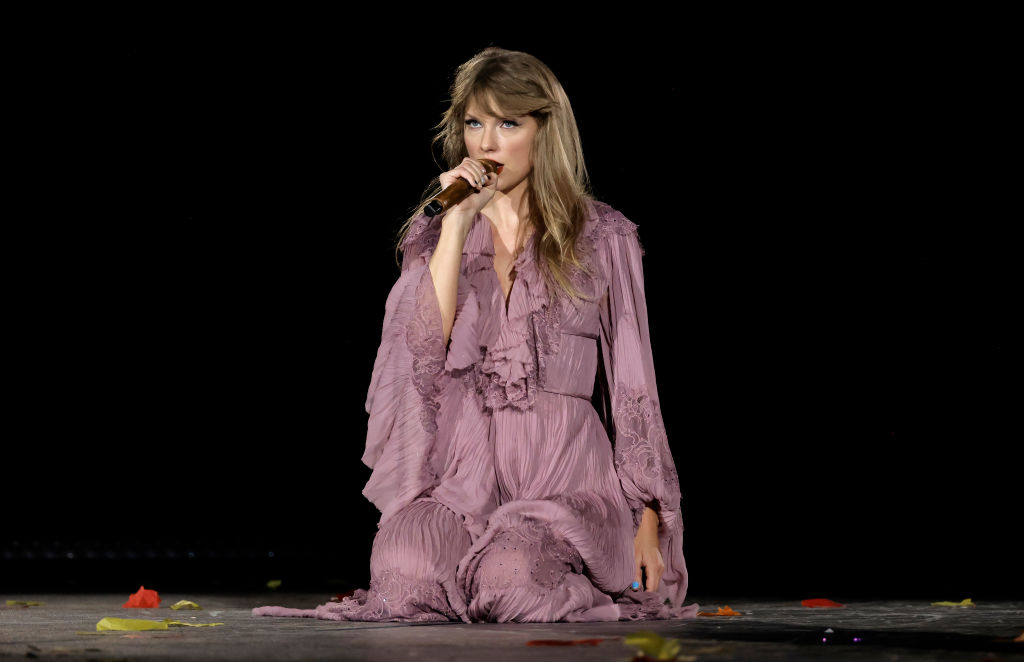

GLENDALE, ARIZONA – MARCH 17: Taylor Swift performs onstage for the opening night of “Taylor Swift | The Eras Tour” at State Farm Stadium on March 17, 2023 in Swift City, ERAzona (Glendale, Arizona). The city of Glendale, Arizona was ceremonially renamed to Swift City for March 17-18 in honor of The Eras Tour. (Photo by Kevin Winter/Getty Images for TAS Rights Management)

Senators Dick Durbin, Lindsey Graham, Amy Klobuchar and Josh Hawley introduced the bill, which was prompted by sexually explicit, AI-generated deepfake images of Swift that circulated during the last week of January and led Twitter to block all searches for her name, The Guardian reported.

The new bill would reportedly make the dissemination of nonconsensual, sexually explicit AI-created images subject to civil penalties, in an effort to put an end to the online circulation of such imagery.

“This month, fake, sexually-explicit images of Taylor Swift that were generated by artificial intelligence swept across social media platforms,” Durbin said, according to a U.S. Senate Committee on the Judiciary press release. “Although the imagery may be fake, the harm to the victims from the distribution of sexually-explicit ‘deepfakes’ is very real.”

A visitor watches an AI (Artificial Intelligence) sign on an animated screen at the Mobile World Congress (MWC), the telecom industry’s biggest annual gathering, in Barcelona. (Photo by Josep Lago/AFP via Getty Images)

The highly sexualized, exaggerated images of Swift went viral on Twitter, with the platform’s metrics suggesting tens of millions of views were logged before the content was taken down, according to The Guardian.

AI-generated images or videos of real people, known as “deepfakes,” have become a troubling trend online, particularly those that digitally remove a person’s clothing or superimpose their face onto someone else’s, the outlet reported.

“Nobody — neither celebrities nor ordinary Americans — should ever have to find themselves featured in AI pornography,” Hawley stated, according to the press release. “Innocent people have a right to defend their reputations and hold perpetrators accountable in court. This bill will make that a reality.”

Such deepfakes can be devastating for victims, and their effects can be long-lasting.

OSNABRUECK, GERMANY – MAY 3: A computer game enthusiast plays a computer game during a computer gaming summit May 3, 2003 in Osnabrueck, Germany. About 900 computer gamers from across Germany came to play games such as “Counter Strike,” “Black Hawk Down,” “WarCraft” and “Medal of Honour” in teams across a LAN network for two days. Many gamers are part of clans, groups of 10-30 people, who play each other over the Internet and meet at LAN summits across Germany. (Photo by Sean Gallup/Getty Images)

Haley McNamara, vice president of strategy and communications at the National Center on Sexual Exploitation, weighed in on the issue in an exclusive interview with the Daily Caller.

“It’s clear that our current federal laws are behind the times when it comes to AI sexual deepfakes or artificial pornography, as well as non-consensually shared sexually explicit images,” she told the outlet.

“Right now, the burden is on victims to discover, find and report their own exploitation — an impossible task as images are re-uploaded across platforms indefinitely.”

McNamara voiced support for the bill and said she hoped it would force a change.

“We need legislation to correct that burden imbalance, and require online platforms to do more to proactively detect and remove any form of non-consensual sexually explicit imagery, and for survivors to have access to civil justice,” McNamara told the Daily Caller.